5 Modern Tech Innovations That Are Racist As Hell

We take technology for granted nowadays because of how invisible and commonplace it is. Nobody likes to think about the algorithms behind their cat videos or porn because it's all weird big brain jargon anyway that only eggheads can decipher.

But technology can make life a living nightmare for some less privileged groups, like people of color, as a fair bit of today's AI technology is actually very racially biased. After all, a human had to make that robot or self-driving car, so it's only natural that human biases would wind up in the code, like ...

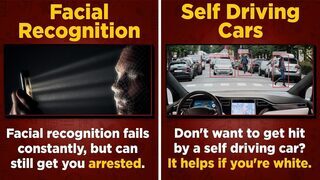

Self-Driving Cars Are More Likely To Hit Black People

Nowadays, with all of the congestion, gas prices, and overall upkeep costs of keeping a vehicle, driving just doesn't seem worth it anymore. That is, until science found a way to seemingly make it easier with self-driving car technology.

On the surface, this might not look so bad. After all, who wouldn't want an AI robot as their own personal driver servant? But there's some evidence that this might not be such a hot idea, as self-driving AI tech is a bit racist.

In 2019, the Georgia Institute of Technology carried out a study to determine innate racial biases in AI programming. The study used something called the Fitzpatrick scale, which is basically the standard scientific metric for measuring skin tones. Turns out we can scientifically prove just how white somebody is without using metrics like mayonnaise consumption or Imagine Dragons albums owned.

The scale was then used to sort a database of pedestrian images by skin tone and see which tones the detection models picked up more often than others. As you may have guessed, the results were a bit harrowing. On average, dark-skinned people were about five percent less likely to be detected than white people. What world is this when even robots are racist?
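For the eggheads in the audience, here is a rough sketch of how that kind of comparison can be tallied. It is not the study's actual code: the toy records are made up, and bucketing Fitzpatrick types 1-3 as "lighter" and 4-6 as "darker" is an assumption made for this example.

```python
from collections import defaultdict

# Made-up toy records: (fitzpatrick_type, was_detected). Not data from the study.
SAMPLES = [
    (1, True), (2, True), (2, True), (3, False),
    (4, True), (5, False), (5, True), (6, False),
]

def skin_group(fitzpatrick_type):
    """Bucket Fitzpatrick types 1-3 as 'lighter' and 4-6 as 'darker' (an assumption)."""
    return "lighter" if fitzpatrick_type <= 3 else "darker"

def detection_rates(samples):
    """Return the share of pedestrians detected in each skin-tone group."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for fitzpatrick_type, detected in samples:
        group = skin_group(fitzpatrick_type)
        totals[group] += 1
        hits[group] += int(detected)
    return {group: hits[group] / totals[group] for group in totals}

rates = detection_rates(SAMPLES)
# The gap between the two groups is the number a bias audit cares about.
gap = rates["lighter"] - rates["darker"]
```

A gap near zero would mean the detector treats both groups about the same. Anything consistently above zero is the kind of result that makes headlines like this one.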

That's not all; self-driving AI may have to deal with a multitude of potential ethical dilemmas, like deciding whom to hit and whom to spare. If an AI car is tasked with determining whether to hit a white or Black person, you can sort of see how this would get real messy fast. The odds are that the white person is more likely to survive this encounter. It is a problem that is currently being worked on as self-driving technology develops even further, but the path to scientific advancements might be paved with some non-white blood.

Fitness Trackers And Apple Watches Don't Work Properly For Dark Skin

Fitness trackers can be a pretty good way of getting your lazy ass into shape by actually keeping track of your progress over time. It's a kind of beneficial technology that also happens to be somewhat exclusionary to non-white people, as the tech sometimes just doesn't work as it should for darker skin tones.

Every single consumer heart rate tracker on the market depends on optical sensor tech to properly monitor your body's vitals at any given moment. Like seemingly a lot of technology nowadays, this has a habit of not actually working too well for people of color. Normally, heart rate monitors like the ones you see in the hospital operate with infrared lights, which aren't as blocked by melanin as, say ... green lights, which fitness trackers overwhelmingly use because companies are cheapasses and skimp on better alternatives.

Because of this, a lot of fitness products on the market are just plain ineffective for anyone not white as a sheet. The big problem here is that the technology is not accounting for the ways different skin tones absorb and react to light, meaning this is entirely an oversight by indifferent companies who don't bother fixing the issue.

Also, the Food and Drug Administration is apparently continuously pressured by big companies to use the same flawed, biased data they collect in medical research, which could lead to a lot of unfortunate implications and problems if the bias isn't accounted for. It seems that the best solution, for now, is to just simply be born white.

Babysitting AI And Predictive Policing Racially Profile

Humans have long had great fears about AI robot overlords taking over the world and turning us all into obedient slaves, but the actual terrifying reality of AI is a lot closer to home than you think. Even simple things like getting a babysitter are subject to the harmful forces of 2001-style artificial intelligence and good ol' fashioned racism.

Predictim is a tech company whose focus is primarily artificial intelligence development for several applications, like behavior prediction that white people can use to keep scary people of color away from their kids. Predictim's Babysitter background check application allows parents to do just that -- by offering an extensive investigation of would-be babysitters, complete with a facial scan because why not.

The way it supposedly works is that the algorithms collect data points from a number of sources, like criminal records and even social media, and put them all together into a risk assessment report that tells you if any would-be babysitters are a potential threat (see: non-white).

A few test runs by people like Gizmodo's Brian Merchant found that even something as innocuous as cussing on Facebook is likely to get someone flagged as more threatening. Even worse, they tested the software with two friends, one Black and one white, and the Black friend was deemed riskier, despite not having any incriminating record or social media posts, outside of the heinous crime of having melanin.

And predictive tech like Predictim has seen use in other industries too, like predictive policing -- because regular policing just wasn't bad enough. Scores of predictive policing tools are being used by law enforcement agencies like the LAPD right now. They use location-based algorithms that comb through data ranging from crime rate trends to even your gender and age to determine when a crime will happen. This tech overwhelmingly casts crime predictions for non-white neighborhoods compared to white ones, and the algorithms exhibit enormous racial bias. While some researchers want to root this bias out of the tech, it's probably a significantly better idea to do away with it entirely. We've seen Minority Report.

Facial Recognition Disproportionately Targets Black People For Law Enforcement

Biometrics is one of those things that is all around you, but you just can't see it. If you happen to be white, this might not affect you all that much, but if not, it can open up a world of hell and discrimination.

Facial recognition technology is already in full use by police, mainly to compare mugshots and driver's licenses to properly identify a criminal. However, that same tech is also often used to arrest protesters at an alarming rate, like in 2015, when police used it to find Baltimore protesters after officers killed Freddie Gray.

The use of technology to hunt down Black activists has a long history, with the FBI specifically targeting activists over the past few years with advanced surveillance technology. Because as we know, people wanting basic human rights are clearly the greatest threat to the United States.

The US National Institute of Standards and Technology conducted an investigation last year and confirmed what we've all already assumed, that facial recognition tech overwhelmingly discriminates against non-white people. They used images from mug shots, visas, and immigration applications for the study to see if algorithms could correctly identify criminals. Turns out the tech backfired, producing significantly more false positive matches for Black and Asian faces, with Black women receiving the most false positives.

Microsoft Has An "Avoid Ghetto" GPS Patent

As a tech giant, Microsoft has a history so controversial that it has its own Wikipedia page. The company has been through everything from terrible labor practices to child labor lawsuits, yet somehow it wasn't content to rest on its garbage company laurels and decided instead to invest in subtly racist GPS technology.

Back in 2012, it was revealed that Microsoft had a patent for a GPS technology that allowed pedestrians to avoid "high crime" areas, which was immediately dubbed the "avoid ghetto" patent due to the implications that "high crime" = "non-white neighborhood." And when your patent uses shady, slippery, racially loaded phrases like "economically challenged areas," it's not hard to see why.

The way it works is that the patent details the use of compiled crime statistics and data to determine the 'safest' path for a walker to take. If high crime rates are reported in an area, the walker will be steered towards another. On the surface, this seems beneficial and pretty innocuous, but crime statistics are often misleading. Many neighborhoods of color are victims of over-policing, false arrests, and wrongful convictions, which work hand in hand to inflate those statistics, giving a false impression that a neighborhood is extremely dangerous.
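To see how much the routing depends on whatever numbers get fed in, here is a minimal sketch of crime-weighted pathfinding. It is not the patent's actual method, which this article doesn't spell out. The street graph, the crime scores, the `crime_weight` knob, and the cost formula are all hypothetical, and plain old Dijkstra is swapped in as the search.

```python
import heapq

# Hypothetical street graph: node -> list of (neighbor, distance_km, reported_crime_score).
# Every name and number here is invented for illustration.
STREETS = {
    "home":   [("elm", 1.0, 0.9), ("oak", 1.5, 0.1)],
    "elm":    [("office", 1.0, 0.9)],
    "oak":    [("office", 1.5, 0.1)],
    "office": [],
}

def safest_path(graph, start, goal, crime_weight):
    """Dijkstra where each edge costs distance * (1 + crime_weight * crime_score)."""
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, distance, crime in graph[node]:
            if neighbor not in visited:
                edge_cost = distance * (1 + crime_weight * crime)
                heapq.heappush(queue, (cost + edge_cost, neighbor, path + [neighbor]))
    return None  # goal is unreachable from start

# With crime ignored, the walker gets the shorter route through Elm.
shortest = safest_path(STREETS, "home", "office", crime_weight=0.0)
# With reported crime weighted in, the walker is steered the long way via Oak.
steered = safest_path(STREETS, "home", "office", crime_weight=2.0)
```

Nothing in the search checks whether Elm's score reflects actual danger or just heavier policing. Inflate the reported number and the detour happens all the same.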

This potential technology can actually give white people the wrong idea that Black people are just lurking in the bushes, waiting to steal their iPhone and HBO Max subscription at any second. Of course, if you're a white pedestrian, you're significantly less likely to be near those neighborhoods to begin with. Clearly, the better solution here is to simply program a GPS that steers people away from places with high white-collar crime rates, like Wall Street.

Top image: Scharfsinn, Sp3n/Shutterstock